The REINFORCE Algorithm

In this short post, we give an introduction to the REINFORCE algorithm.

Attention and Transformers

In recent years, Transformer-based models have become increasingly popular and are quickly taking over not only NLP, but also computer vision and many other fields in AI. In this post, we give a tutorial on the Transformer and discuss several state-of-the-art models based on it,...

RNN, LSTM and GRU

In this post, we introduce the recurrent neural network (RNN) model in natural language processing (NLP), as well as two improvements over it: long short-term memory (LSTM) and gated recurrent units (GRU).

Sequence Labeling with HMM and CRF

Sequence labeling is a classical task in natural language processing. In this task, a program is expected to recognize certain information in a given piece of text. This is challenging because, even though input data items in real applications can look similar, there may be small variations here and there...

Embeddings and the word2vec Method

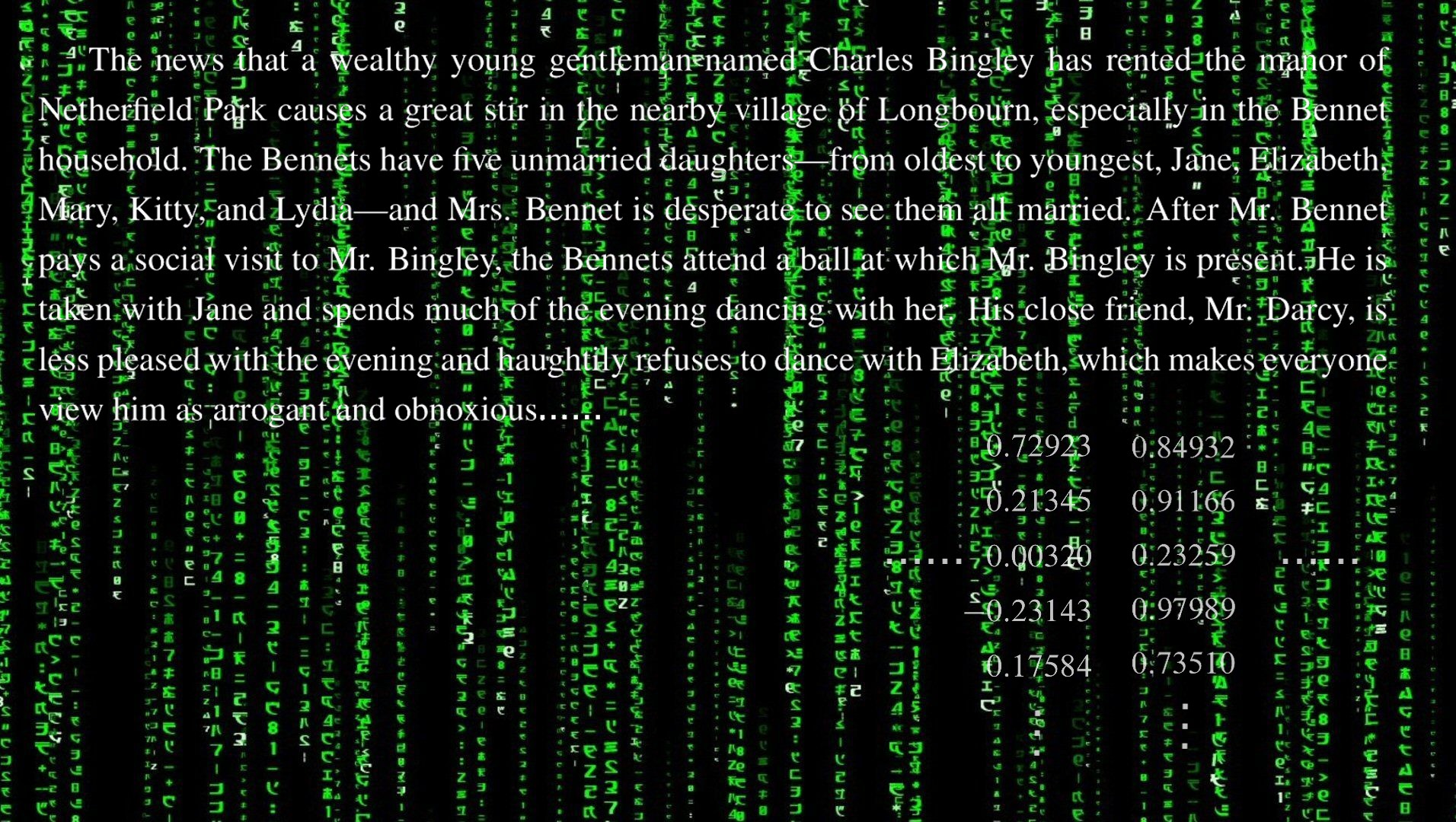

On the surface, words are not numbers. But words have similarities, differences, associations, relations and connections. For example, "man" and "woman" are words that describe humans, while "apple" and "watermelon" are fruit names. The flow of words in an article always follows certain rules. How to quantify a word...

Natural Language Processing, Probabilities and the n-gram Model

...This is the first in a series of posts on Natural Language Processing (NLP). We give an introduction to the subject, mention two model evaluation methods, see how to assign probabilities to words and sentences, and learn the most basic model in NLP: the n-gram model.